Chatflow Now Supports GPT-4o Mini: What You Need to Know

We've recently added support for OpenAI's new GPT-4o Mini model to Chatflow. Here's a rundown of what this means for our users.

What is GPT-4o Mini?

GPT-4o Mini is a new model from OpenAI that aims to balance performance and cost. It's designed to be a step between GPT-3.5 Turbo and the full GPT-4 model.

Key Features of GPT-4o Mini

- Performance: GPT-4o Mini scored 82% on the MMLU benchmark, compared to GPT-3.5 Turbo's 69.8%.

- Cost: It's priced lower than GPT-3.5 Turbo, which could lead to cost savings for some users.

- Language Capabilities: The model offers improved performance in non-English languages compared to GPT-3.5 Turbo.

- Context Window: Like GPT-4 Turbo and GPT-4o, it has a 128k-token context window, and it supports up to 16k output tokens per request.

- Knowledge Cut-off: The model's training data is current up to October 2023.

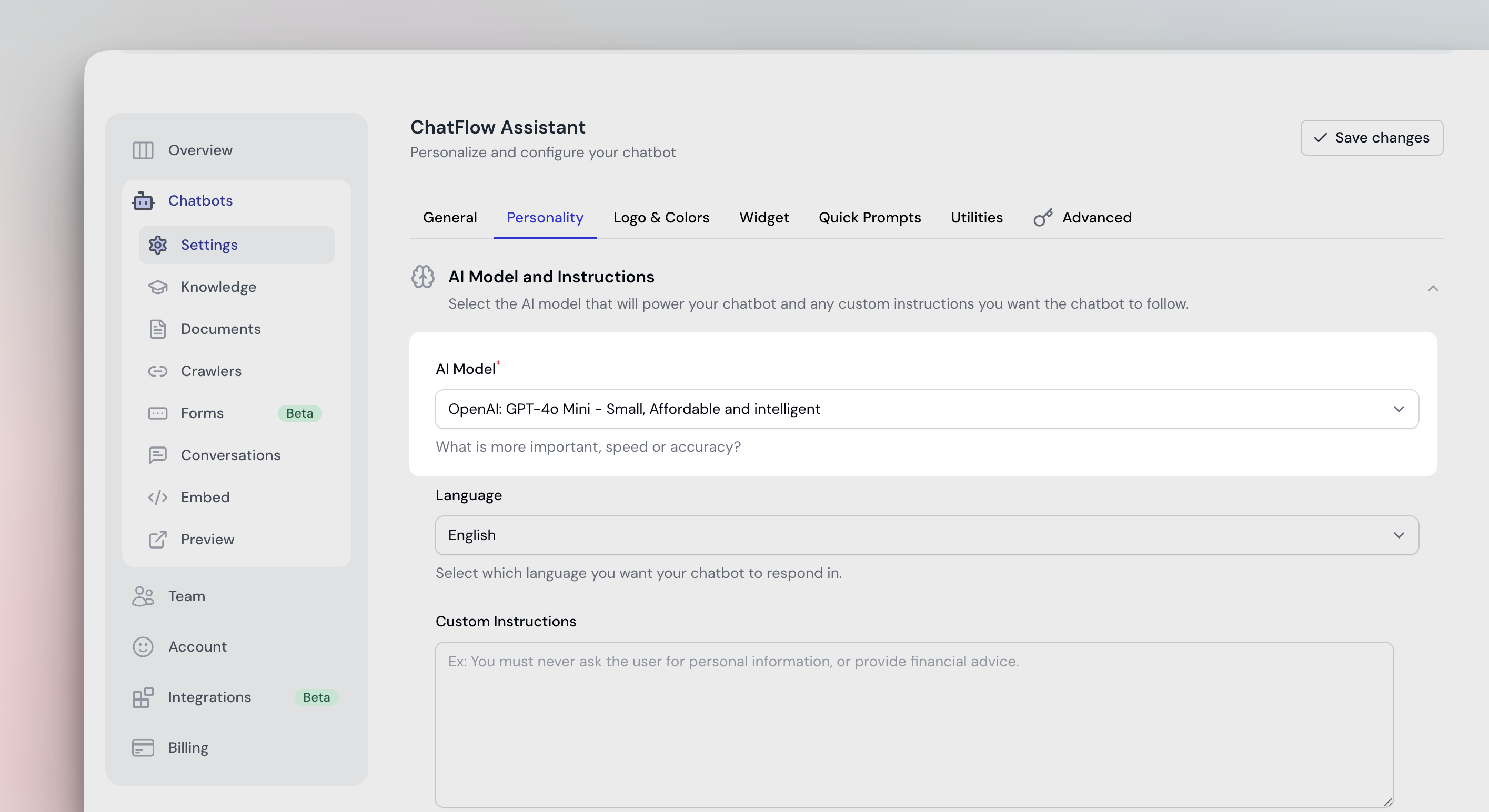

How to Use GPT-4o Mini in Chatflow

If you're interested in trying out GPT-4o Mini, here's how to enable it:

- Log in to your Chatflow account

- Navigate to your Chatbot settings

- Find the "AI Model" section

- Select "GPT-4o Mini" from the options

- Save your changes

Use Cases Where GPT-4o Mini Might Be Beneficial

GPT-4o Mini offers several potential advantages that could benefit various chatbot applications. Let's dive deeper into these use cases and explore why they matter:

1. Processing Longer Documents

GPT-4o Mini's 128k context window allows it to handle larger chunks of text, which could be particularly useful when working with extensive knowledge bases. This capability might enable your chatbot to:

- Provide more comprehensive answers by accessing and processing larger sections of documentation

- Maintain context over longer conversations, potentially improving the coherence and relevance of responses

- Analyze and summarize lengthy reports or articles more effectively

However, it's important to note that while the model can process larger documents, the quality and accuracy of its understanding may vary. Further testing is needed to determine how well it performs with different types of long-form content.
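If you want a feel for how much text fits in a single request, the Python sketch below splits a long document on blank lines into chunks that stay within GPT-4o Mini's 128k-token context window while reserving room for up to 16k output tokens. It's an illustration only, not a description of how Chatflow processes your knowledge base. It assumes roughly four characters per token (a common rule of thumb for English text), and the `prompt_overhead` default is a made-up allowance for instructions and chat history. For exact counts, use a real tokenizer such as OpenAI's `tiktoken` library.

```python
# A minimal sketch: split a long document into chunks that each fit within
# GPT-4o Mini's context window while leaving room for the model's reply.
# ASSUMPTION: ~4 characters per token, a rough rule of thumb for English text.
# For exact counts, use a real tokenizer instead of estimate_tokens().

CONTEXT_WINDOW = 128_000     # total tokens per request (input + output)
MAX_OUTPUT_TOKENS = 16_384   # up to 16k output tokens reserved for the reply
CHARS_PER_TOKEN = 4          # heuristic, not an exact conversion


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of `text`, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def chunk_document(text: str, prompt_overhead: int = 1_000) -> list[str]:
    """Split `text` on blank lines into chunks that fit the input budget.

    `prompt_overhead` is a hypothetical allowance for the instructions and
    chat history sent alongside each chunk.
    """
    budget = CONTEXT_WINDOW - MAX_OUTPUT_TOKENS - prompt_overhead
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for paragraph in text.split("\n\n"):
        paragraph_tokens = estimate_tokens(paragraph)
        # Close the current chunk if this paragraph would push it over budget.
        if current and current_tokens + paragraph_tokens > budget:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(paragraph)
        current_tokens += paragraph_tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    # Three ~50k-token paragraphs: the first two fit together, the third doesn't.
    doc = "\n\n".join(["a" * 200_000, "b" * 200_000, "c" * 200_000])
    print([estimate_tokens(c) for c in chunk_document(doc)])
```

A single paragraph longer than the budget still ends up as one oversized chunk here; a more careful splitter would also break long paragraphs at sentence boundaries.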

2. Handling Higher Volumes of Queries

The lower cost of GPT-4o Mini compared to GPT-3.5 Turbo could make it more feasible to handle a higher volume of queries. This could be beneficial for:

- Customer service chatbots that need to manage many concurrent conversations

- Information retrieval systems that process numerous requests throughout the day

- Chatbots integrated into high-traffic websites or applications

Keep in mind that while the model may handle higher volumes more cost-effectively, it's crucial to monitor the quality of responses to ensure they meet your standards.
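Whether the lower price actually adds up depends on your traffic. Here's a rough back-of-the-envelope calculator in Python. The prices in the example are placeholders, not OpenAI's actual rates, so substitute the current per-million-token prices from OpenAI's pricing page, along with your own average query volume and token counts per query.

```python
# A back-of-the-envelope cost comparison between two models.
# ASSUMPTION: the prices in the example are placeholders, not OpenAI's rates.
# Replace them with current per-million-token prices from OpenAI's pricing page.
from dataclasses import dataclass


@dataclass
class ModelPricing:
    name: str
    input_per_million: float   # USD per 1M input (prompt) tokens
    output_per_million: float  # USD per 1M output (completion) tokens


def monthly_cost(pricing: ModelPricing, queries_per_day: int,
                 avg_input_tokens: int, avg_output_tokens: int,
                 days: int = 30) -> float:
    """Estimate the USD cost of `days` of traffic at the given averages."""
    queries = queries_per_day * days
    input_cost = queries * avg_input_tokens * pricing.input_per_million / 1_000_000
    output_cost = queries * avg_output_tokens * pricing.output_per_million / 1_000_000
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical prices and traffic, for illustration only.
    candidates = [
        ModelPricing("cheaper-model", input_per_million=0.20, output_per_million=0.80),
        ModelPricing("current-model", input_per_million=0.50, output_per_million=1.50),
    ]
    for model in candidates:
        cost = monthly_cost(model, queries_per_day=5_000,
                            avg_input_tokens=1_500, avg_output_tokens=300)
        print(f"{model.name}: ${cost:,.2f} per month")
```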

3. Applications Where Lower Latency Is Important

Speed is a critical factor in chatbot performance, and GPT-4o Mini's potential for lower latency could be a significant advantage. Here's why speed matters in chatbots:

- User Experience: Faster responses lead to more natural, fluid conversations, enhancing user satisfaction and engagement.

- Efficiency: Quicker processing allows chatbots to handle more queries in less time, potentially improving overall system performance.

- Real-time Applications: For use cases requiring near-instantaneous responses (e.g., live customer support or interactive tutorials), even small improvements in speed can make a substantial difference.

GPT-4o Mini's balance of performance and speed could make it suitable for applications where rapid response times are crucial. However, it's essential to test the model in your specific use case to ensure it meets your latency requirements.
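One simple way to check latency is to time a batch of representative requests and look at both the median and the 95th percentile, since occasional slow responses matter to users as much as the typical one. In this Python sketch, `send_request` is a hypothetical placeholder for however you send a message to your chatbot, and `fake_model` only simulates a delay for demonstration.

```python
# A minimal sketch for timing representative requests to your chatbot.
# ASSUMPTION: `send_request` is a hypothetical stand-in for however you send a
# message (an HTTP call to your deployment, an SDK call, etc.).
import statistics
import time
from typing import Callable, Iterable


def measure_latency(send_request: Callable[[str], object],
                    prompts: Iterable[str]) -> dict[str, float]:
    """Time each request and return median, 95th-percentile and max seconds."""
    timings: list[float] = []
    for prompt in prompts:
        start = time.perf_counter()
        send_request(prompt)  # the response itself is ignored here
        timings.append(time.perf_counter() - start)
    if len(timings) < 2:
        raise ValueError("need at least two prompts to compute percentiles")
    # With n=20, quantiles() returns 19 cut points; the last is the 95th percentile.
    # "inclusive" interpolates within the observed range instead of extrapolating.
    p95 = statistics.quantiles(timings, n=20, method="inclusive")[-1]
    return {"median": statistics.median(timings), "p95": p95, "max": max(timings)}


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        # Hypothetical model that simply waits ~10 ms before answering.
        time.sleep(0.01)
        return "ok"

    print(measure_latency(fake_model, ["What are your opening hours?"] * 20))
```

To keep the numbers comparable, run the same prompts against each model you're considering, from the same network location and at a similar time of day.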

4. Multilingual Applications

Given GPT-4o Mini's improved performance in non-English languages, it could be particularly useful for:

- Global customer support chatbots

- Multilingual content analysis and summarization

- Cross-language information retrieval systems

Again, thorough testing across different languages and use cases is necessary to verify the model's capabilities in this area.

The Need for Further Testing 🤔

While GPT-4o Mini shows promise in these areas, it's crucial to approach its capabilities with a balanced perspective. The model has only been available for two days, and it will take some time to uncover its quirks and limitations. A few things to keep in mind:

- Performance Variability: The model's effectiveness may vary depending on the specific task, domain, and complexity of the queries it handles.

- Language-Specific Performance: While it shows improvements in non-English languages, the level of improvement may not be uniform across all languages.

- Knowledge Base Interaction: How well the model leverages information from your specific knowledge base needs to be evaluated to ensure accurate and relevant responses.

- Cost-Benefit Analysis: While potentially more cost-effective, it's important to weigh any savings against potential changes in performance or output quality.

- Edge Cases: Identifying and testing edge cases in your specific application can help uncover any limitations or unexpected behaviors of the model.

That said, we encourage you to experiment with GPT-4o Mini in a controlled environment, comparing its performance against GPT-4 Turbo, GPT-4o, and other models for your specific use cases.

This kind of hands-on testing will give you the most accurate picture of whether GPT-4o Mini is a good fit for your chatbot.
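As a starting point for that kind of comparison, the Python sketch below runs the same test questions through several models and reports a pass rate per model and per language. The model callables are hypothetical wrappers you would write yourself, and checking for an expected keyword is a deliberately crude stand-in for a proper quality review; it's meant to flag obvious regressions, not to replace reading the answers.

```python
# A minimal sketch of a side-by-side evaluation on your own test questions.
# ASSUMPTIONS: each model is wrapped in a hypothetical callable that takes a
# question and returns the chatbot's answer as a string, and "correct" means
# the answer mentions an expected keyword (a deliberately crude check).
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class TestCase:
    question: str
    expected_keyword: str
    language: str = "en"


def evaluate(models: dict[str, Callable[[str], str]],
             cases: list[TestCase]) -> dict[str, dict[str, float]]:
    """Return each model's pass rate, broken down by language."""
    results: dict[str, dict[str, float]] = {}
    for name, ask in models.items():
        passed: dict[str, int] = defaultdict(int)
        total: dict[str, int] = defaultdict(int)
        for case in cases:
            answer = ask(case.question)
            total[case.language] += 1
            # Case-insensitive keyword match as a rough correctness signal.
            if case.expected_keyword.lower() in answer.lower():
                passed[case.language] += 1
        results[name] = {lang: passed[lang] / total[lang] for lang in total}
    return results


if __name__ == "__main__":
    cases = [
        TestCase("What are your opening hours?", "9am"),
        TestCase("¿Cuál es su horario?", "9", language="es"),
    ]
    # Hypothetical fake models standing in for real chatbot calls.
    fake_models = {
        "model-a": lambda q: "We open at 9am.",
        "model-b": lambda q: "Please contact support.",
    }
    print(evaluate(fake_models, cases))
```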

As always, we're keen to hear about your experiences with GPT-4o Mini. Your feedback is invaluable in helping us refine and improve Chatflow's capabilities. If you see interesting results, run into challenges, or have suggestions, please don't hesitate to reach out! 👋